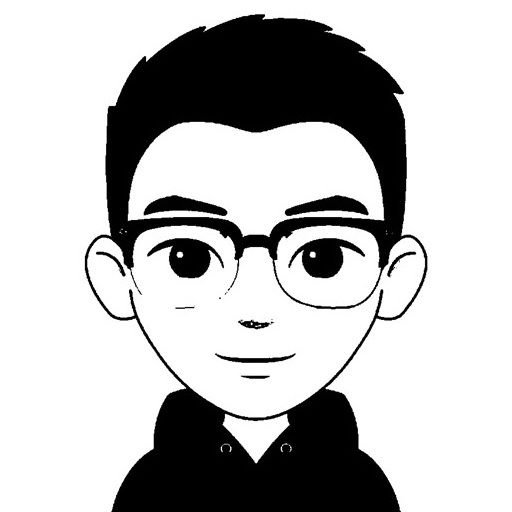

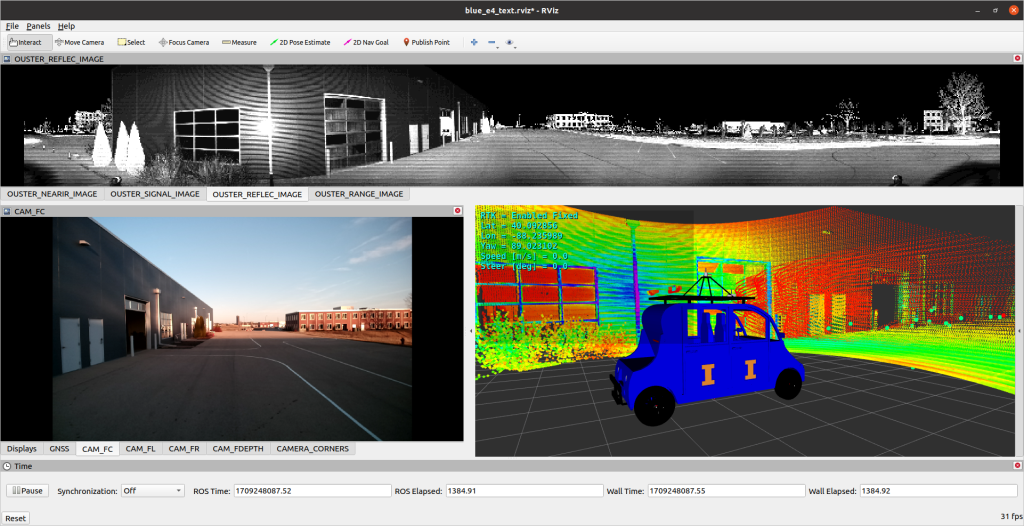

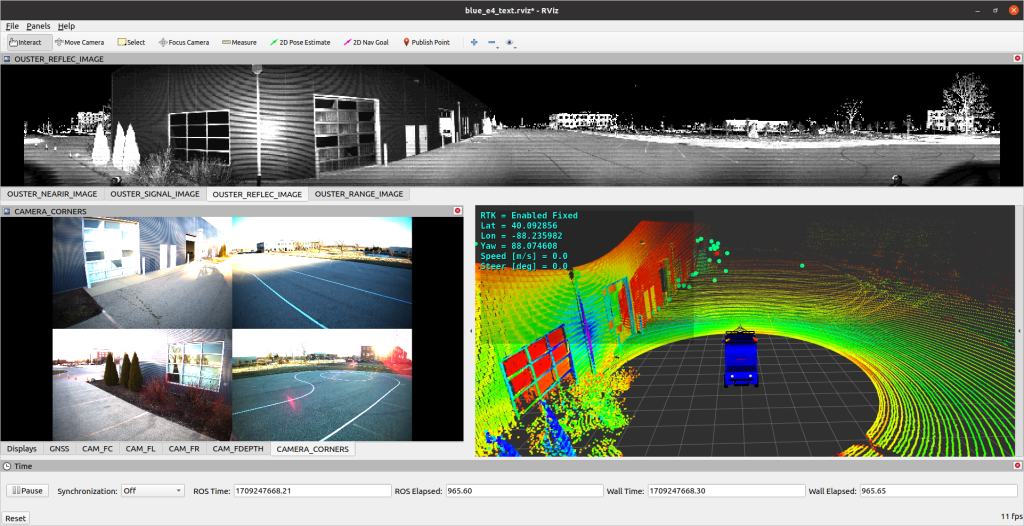

From Jan. 2024 to Mar. 2024, I led the integration of the sensor suite (LiDAR, camera, GNSS/IMU, and 4D automotive radar) on this electric self-driving vehicle, and I configured each sensor using its respective SDK. The vehicle supports both ROS Noetic (ROS 1) and ROS Humble (ROS 2).

The ultimate goal of my work at the Center for Autonomy is to develop an autonomous vehicle with Level 3/4 capabilities, together with a corresponding software stack. I plan to begin by deploying the open-source Autoware.Auto software on the vehicle. From there, I will further develop, customize, and refine the key modules: perception, localization and mapping, planning, and control. Stay tuned for future updates!
